Practical Cache Coherence¶

Version History

| Date | Description |

|---|---|

| Jul 2, 2021 | add implication discussion |

| Feb 24, 2020 | Kobe and Gigi. Add Intel CCIP. |

| Oct 3, 2019 | Add FPGA related discussion |

| Jun 28, 2019 | Initial draft |

TL;DR. This is a note on how cache coherence protocols are implemented in real hardware. This note is NOT just about acadamic new ideas.

I started this when I was having a hard time optimizing lock delegation,

which relies on fine-tuning cache coherence to achieve extremely fast locks.

Summary and Thoughs¶

The textbooks tough us the basic concept of MESI. And specific algorithms like snoop and directory. What usually missing is the implementation details when it comes to: 1) how to solve conflicts, 2) what if there is no single shared bus.

Modern processors have Network-on-Chip (NoC). Within the die, cores, cache slices (large LLC is usually divided into slices), memory controllers and other components are connected via an on-chip network (e.g., a ring in old-generation Xeon, or a mesh in Xeon Scalable). This architecture is no different from connecting a set of distributed servers. The NoC has its own protocol (I guess ranging from L1 to L3, must be reliable data delivery). And all on-chip components communicate by sending/receiving packets. There is no central coordinator.

What this means for cache coherence?

Cache requests generated by MESI protocols should appear atomic to requesting cores. Given the distributed nature of all resources (via NoC), those cache requests are implemented like distributed transactions! This brings great complexity to a performant and correct cache coherence implementation. Hardware is much much faster than a set of distributed nodes. Besides, it cannot implement arbitrary logic, whatever protocol they design has a tight time budget.

For example, Intel uses the MESIF cache coherence protocol. In their implementation, when a core made a read to an invalid line, the corresponding cache will perform a cache read transaction to get the data from either other caches or memory. This transaction consists of multiple steps, such as: send requests, collect responses, and finally send ACKs.

Those transactions will conflict if multiple reads and writes happen at the same time. Someone has to resolve it. It can be resolved by different cache controllers, or by a single serialization point like home agent.

Just like you can have many ways to implement transactions for distributed systems, there are also many ways to do cache coherence transactions. And there are many.

Atomic Read-Modify-Write (RMW) instructions will make cache coherence

implementations even more complex. Those instructions include

read-and-inc, test-and-set, and lock;-prefixed.

I think, there will some “lock the bus”, or “locked state” at the

home agent per cache line. Having atomic RMW instructions

will add more complexity to the overall transaction design.

While reading Intel related cache coherence diagrams/transactions, you might find many different descriptions. Don’t panic. They are just different implementations proposed by Intel. Different implementations will have different trade-offs and performance, you can check Frank’s post for more details.

Directory-based cache coherence protocol and implementation will be the future for multicore machines. Because it incurs much less coherence traffic than snoop-based ones, thus more scalable. The trend is confirmed by recent Intel UPI directory-based approach.

Related readings:

- [1]: Why On-Chip Cache Coherence Is Here to Stay

- [2]: QPI 1.1 Invovled

- [3]: Paper: Multicast Snooping: A New Coherence Method Using a Multicast Address Network, ISCA ‘99

- [4]: Paper: Using Destination-Set Prediction to Improve the Latency/Bandwidth Tradeoff in Shared-Memory Multiprocessors, ISCA‘03

- [5]: The trade-off:

Left questions: - Do cache coherence implementations ensure fairness among cores?

Implication for Synchronization¶

Synchronization code (e.g., locking code) has deep relation with cache coherence and memory consistency. A lot optimizations are playing with certain cache coherence protocols. For example, a locking primitive may want to first read the lock then try to atomically change its value. By doing so, the first read will not trigger any cache invalidation to other cores, hence save latency and on-chip bandwidth.

As for memory consistency, it affects how many barriers should be inserted and whether a release/acquire semantic should be used.

Anyway, if you are implementing synchronization primitive, pay attention to cache coherence. Read the discussion posted by Dr. Bandwidth, especially this one on cache coherence flows on a procuder-consumer case. It is very useful if you are trying to implement high-performance spinlocks and concurrency data structures.

Implication for On-Chip Bandwidth and NUMA¶

Cache coherence consumes a LOT on-chip bandwidth. Think about all the messages it will send for a single transation. And this transaction can be easily triggered by normal read/write. So the cache coherent protocol end up using a lot on-chip bandwidth.

This is problematic for two reasons: a) the NoC bus/ring is shared. It will affect other traffic hence performance. b) In a NUMA architecture, the interconnection between CPU dies is responsible for a lot things like data transfer, cache coherence traffic, and other misc traffic. If cache coherence uses a lot, there is little room for others.

Many have complained this.

So what’s the mitigation? The first try is to move from Snoop to Directory so to avoid excessive traffic. Some sort of centralized coordinator could resolve conflicts therefore save tons of traffic. Intel’s cache coherence protocol has involved for this direction as well. Other measures? I don’t know.

Readings¶

- The Architecture of the Nehalem Processor and Nehalem-EP SMP Platforms, chapter 5.2 Cache-Coherence Protocol for Multi-Processors.

- Intel: Performance Analysis Guide for Intel® Core™ i7 Processor and Intel® Xeon™ 5500 processors

- Dr.Bandwidth on Core2Core cache coherence flows when running producer-consumer type of workload.. 100% recommended.

- Blog: NUMA Deep Dive Part 3: Cache Coherency

- The BEST blog I’ve seen on the topic of

Intel snoop models. - Intel is using MESIF cache coherence protocol, but it has multiple cache coherence implementations.

The first one is

Source Snoop(orEarly Snoop), which is more like a traditional snoop-based cache coherence implementation. Upon miss, the caching agent will broadcast to other agents. The second one isHome Snoop, which is more like a directory-based cache coherence implementation. Upon miss, the caching agent will contact home agent, and then the home agent will send requests to other caching agents who have the requested cache line. There are other implementations like Cluster-on-Die. Intel UPI gets rid of all this complexity, it is only using directory-based, in the hope to reduce cache coherence traffic, which make sense. - Related: Broadwell EP Snoop Models

- Related: Skylay UPI

- The BEST blog I’ve seen on the topic of

- Paper: MESIF: A Two-Hop Cache Coherency Protocol for Point-to-Point Interconnects (2009)__

- A MUST read.

- This paper has the most extensive description of the MESIF protocol implementation.

It has many

timing diagramsthan describe how cache requests actually proceed. Those diagrams can help us understand what is needed to finish a cache request. - Their slides has more timing diagrams.

- But do note: the implementation described by this paper is different from what Intel QPI has in products. The difference is discussed at chapter 4. MESIF and QPI, namely, other caching agents will send responses to Home agent rather than to requesting agent. QPI relies on Home agent to solve conflict.

- Also note: this is just one of the possible implementations to realize MESIF protocol. There could be many other ways, e.g., QPI source snooping, QPI home snooping. But all of them share the essential and general concepts and ideas.

- Appendix I: Large-Scale Multiprocessors and Scientific Applications,

chapter 7 Implementing Cache Coherence.

- This is probably some most insightful discussion about real implementation of cache coherence. With the distributed nature and Network-on-Chip, implementing cache coherence in modern processors is no different than implementing a distributed transaction protocol.

- Cache activities like read miss or write miss have multi-step operations, but they need to appear as “atomic” to users. Put in another way, misses are like transactions, they have multiple steps but they must be atomic. They can be retried.

- Having directory for cache coherence will make implementation easier. Because the place (e.g., L3) where directory resides can serve as the serialization point. They can solve write races.

Home directory controllerandcache controllerwill exchange messages like a set of distributed machines. In fact, with NoC, they are actually distributed system.

- Intel: An Introduction to the Intel® QuickPath Interconnect,

page 15 MESIF.

- HotChips slide, has timing diagrams.

- It explains the

Home SnoopandSource Snoopused by Intel. - Based on their explanation, it seems both

Home SnoopandSource Snoopare using a combination of snoop and directory. The Processor#4 (pg 17 and 18) maintains the directory. - And this is a perfect demonstration of the details described in Appendix I: Large-Scale Multiprocessors and Scientific Applications.

- Related patent: Extending a cache coherency snoop broadcast protocol with directory information

- Paper: Multicast Snooping: A New Coherence Method Using a Multicast Address Network, ISCA ‘99

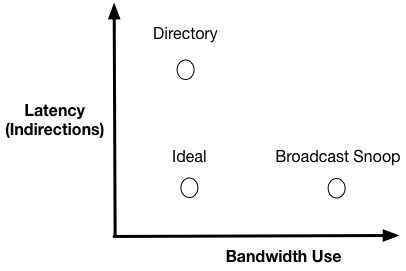

- A hybrid snoop and directory cache coherence implementation. The insight is snoop cause too much bandwidth, directory incurs longer latency.

- So this paper proposed

Multicast snoop, where it multicasts coherence transactions to selected processors, lowering the address bandwidth required for snooping.

- Paper: Why On-Chip Cache Coherence Is Here to Stay, Communications of ACM‘02

- This paper discusses why cache coherence can scale. A nice read.

- R1: Coherence’s interconnection network traffic per miss scales when precisely tracking sharers. (Okay increased directory bits, what about those storage cost? See R2).

- R2: Hierarchy combined with inclusion enables efficient scaling of the storage cost for exact encoding of sharers.

- R3: private evictions should send explicit messages to shared cache to enable precise tracking. Thus the recall (back invalidation) traffic can be reduced when shared cache is evicting (assume inclusion cache).

- R4: Latencies of cache request can be amortized.

- Book: Parallel Computer Organization and Design, Chapter 7.

- Links coherence and consistency together. This chapter uses detailed graphs to show how different cache coherence implementations affect consistency.

- Book: A Primer on Memory Consistency and Cache Coherence

- Best book for this topic.

- Dr.Bandwidth on explaining core-to-core communication transactions!

- Seriously, it’s so good!

- Although, I just feel there are so many unpublished details about the exact coherence transactions. Dr.Bandwidth himself used a lot “maybe”, and listed possible actions.

- Transactional Memory Coherence and Consistency, ISCA‘04

- Programming with Transactional Coherence and Consistency (TCC)

- Slide1

- Awarded the most influential paper at ISCA 2019. I took a read today (Jul 21, 2019).

- I feels like it’s using the “batch” optimization for all time. The TCC design, kind of combines both cache coherence and memory consistency: how transactions commit or orders, determins the coherence and consistency.

- It seems the load/store speculative execution used in their context is so similar to what Dr.Bandwidth said about Intel’s implementation. Basically, the processor might read some data from L1/L2 and continue execution, but there is a chance, that the data is modifed by others, and the L3 caching agent or home agent could decide to revoke it. Once receiving such revoke message, the processor must cancel all executions that use the speculatively read data.

- It mentions couple Thread-Level Speculation papers, I think they should on this topic.

Case Study¶

Intel¶

Misc Facts.

- Intel Caching Agent (Cbox) is per core (or per LLC slice). Intel Home Agent is per memory controller.

- “The LLC coherence engine (CBo) manages the interface between the core and the last level cache (LLC). All core transactions that access the LLC are directed from the core to a CBo via the ring interconnect. The CBo is responsible for managing data delivery from the LLC to the requesting core. It is also responsible for maintaining coherence between the cores within the socket that share the LLC; generating snoops and collecting snoop responses from the local cores when the MESIF protocol requires it.”

- “Every physical memory address in the system is uniquely associated with a single Cbox instance via a proprietary hashing algorithm that is designed to keep the distribution of traffic across the CBox instances relatively uniform for a wide range of possible address patterns.”

- Read more here, chapter 2.3.

- Starting from Intel UPI, Caching Agent and Home Agent are combined as CHA.

- A good discussion about why QPI gradually drop

Source Snoopand solely useHome Snoop.- The motivation is scalability. It turns out the new UPI only supports directory-based protocol.

- This makes sense because 1) inter socket bandwidth is precious, 2) snoop will consume a lot bandwidth.

- Intel UPI is using directory-based home snoop coherency protocol

- To provide sufficient bandwidth, shared caches are typically interleaved by addresses with banks physically distributed across the chip.

A Transaction Breakdown.

Intel does not disclose too much details about their cache coherence implementations. The most valuable information is extracted from uncore PMU manuals, and discussions from Dr. Bandwidth. According to Dr. Bandwidth, the Intel CPU could dynamically adapt its coherence strategy during runtime according to workload. There won’t be one fixed cache coherence implementation, there will be many. It depends on workload which one is used at runtime.

What happens when a core tries to access a cache line? A detailed cache protocl breakdown derived from Dr.Bandwidth’s comment.

- Physical addresses are uniquely hashed into L3 slices. That means each individual physical address belongs to a L3 slice, and also belongs to a home agent.

- Upon L2 miss, it will send requests to corresponding L3 slice. If the L3 slice is in the local socket, the request can be delievered within the same socket. If the L3 slice belongs to another remote socket, the L2 miss request will be sent over QPI/UPI. Also note that the L2 controller will not send snoop requests. (This is answering the question of “why using local memory is faster than remote” from the cache coherence perspective.)

- At L3, when received the request from a L2,

- If it’s in source snoop model, it will send

snoop messagesto other sockets. - If it’s in home snoop model, it will send

read messageto other sockets. The another socket will generate snoop and collect responses. (R3QPI or home?) - Quote Dr. Bandwidth: Maintaining consistency is easier if the data is sent to the L3 first, and then to the requesting core, but it is also possible to send to both at the same time (e.g., “Direct2Core”). In recent processors, these return paths are chosen dynamically based on undocumented states and settings of the processor.

- I’m not sure who will ACK L2 at last. L3 or home agent? Both are possible.

- If it’s in source snoop model, it will send

- I think both L3 and home agent have directory information. They know where to send snoop/read messages. And both of them can serialize coherence transactions! It’s just undocumented who is doing what.

- In generall, we need to bear the fact that we cannot just figure out how Intel

cache coherence works underlying. We maybe just need to “vaguely” know the fact that:

- Both directory and snoop will be used in combination.

- L3/home agent will serialize conflicting transactions

- L3/home agent will send data to requesting core

- L3/home agent will send final ACK to requesting L2

- A coherence transaction is a multi-step distributed transaction. It involes sending requests, serialize conflicts, receiving responses/ACKs.

Read the discussion posted by Dr. Bandwidth, especially this one on detailed cache coherence flows on a procuder-consumer case, which is essential if you are trying to implement high-performance spinlocks and concurrency data structures.

AMD¶

ARM¶

- AMBA CHI Specifications

- This is probabaly the most comprehensive document I’ve ever seen about cache coherence. Although terms used by ARM differs from the ones used by Intel, still, you can map them. Chapter 5 Interconnect Protocol Flows has a lot timing diagrams regarding read/write/atomic coherence transactions.

- It’s a good reference to know, but it would be hard to actually understand the details.

OpenCAPI and CCIX¶

CXL¶

Like OpenCAPI and CCIX, it builds on top of PCIe. It is intra-server cache coherence protocol.

Not very exciting to me. But it does receive some spotlight recently.

OpenPiton¶

- OpenPiton Microarchitecture Specification

- Directory-based MESI

- This spec has detailed coherence message packet format and type. Unfortunately, it does not say anything about how they deal with coherence transaction conflicts. E.g., some timeline diagrams like Figrue ⅔ in this paper.

- BYOC: A “Bring Your Own Core” Framework for Heterogeneous-ISA Research, ASPLOS‘20

FPGA¶

- Analysis and Optimization of I/O Cache Coherency Strategies for SoC-FPGA Device, FPL‘19

- LEAP Shared Memories: Automating the Construction of FPGA Coherent Memories, FCCM‘14.

- This work is built on their earlier work, which basically add the data caching concept to FPGA: using BRAM as L1, on-board DRAM as L2, host or remote DRAM as L3.

- In their earlier work, each FPGA application (or bitstream) has a private L1 cache.

- In this work, the add MESI coherence to these private L1 caches, as in they can make multiple L1 cache cache-coherent.

- The techniques and protocols from this paper are similar to the exisiting ones. For example, 1) they use a global serializing point to serialize transactions, 2) they designed a lot messaging types such as INV, RESP and so on.

- VMware Research Project PBerry

- A very interesting and promising project.

- They have this follow up work “Rethinking software runtimes for disaggregated memory, ASPLOS‘21”. But it is simulation using the HotOS paper idea. The real FPGA has not been built yet. They need to know Intel’s protocol to build it, which is.. impossible? I think it is probably better to use RISC-V.

- Intel FPGA PAC

- Intel itself is building a FPGA-CPU cache coherent setting. They use the Intel UPI interconnect to natually the spectrum. The FPGA shell has some modules to handle this.

-

- Maybe the ASPLOS‘20 Optimus paper is uing CCIP-related research platform?

-

Also some pointer chasing related stuff

Formal Verification¶

I remember there are couple works from Princiton doing cache protocol verification.